VR audio, or 3D audio, is often described in terms of spatialization: making a sound appear to come from a specific location in 3D space. This technique is critical to deep immersion, because sound gives us important cues about where we are in a real three-dimensional environment. As with positioning technology, spatialization depends on two key factors: direction and distance.

HRTF (head-related transfer function) and directional spatialization

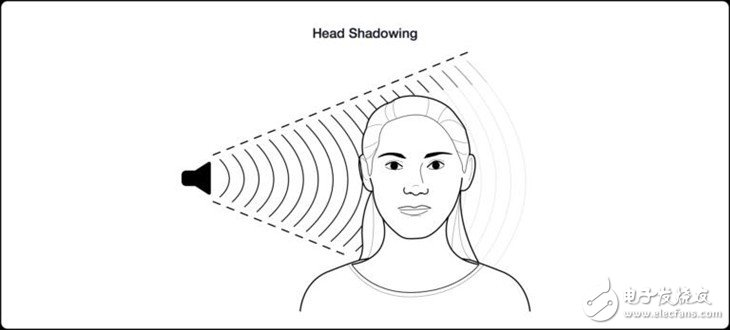

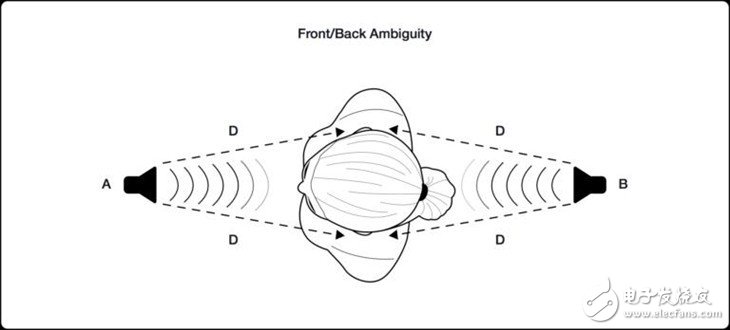

Depending on the direction a sound arrives from, the geometry and structure of our head and ears alter it in characteristic ways. The head-related transfer function (HRTF) describes these direction-dependent effects, and applying it to a sound is what allows the listener to localize it.

How to record HRTF

To capture an HRTF, a person sits in an anechoic chamber with small microphones placed in their ears. Sounds are played from every direction of interest and recorded through those microphones. Each recording is then compared with the original sound to compute the HRTF for that direction. This has to be done for both ears, and for a large enough number of discrete directions to build a usable sample set. Of course, not everyone has the same physical characteristics, and it is impractical to record everyone's HRTF, so labs such as Microsoft Research and Oculus use a generic reference set that works for most situations, especially when combined with head tracking.

HRTF application

Once an HRTF set is available, a developer who knows the direction a sound should come from can select the matching HRTF data and apply it to the sound. This is done either by convolution in the time domain or by multiplication in the frequency domain using an FFT/IFFT pair. In effect, the audio signal is filtered so that it seems to come from a particular direction. It sounds simple, but it is computationally expensive and hard to implement well.
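As a minimal sketch of the time-domain approach, the snippet below convolves a mono signal with one impulse response per ear. The time-domain form of an HRTF is usually called a head-related impulse response (HRIR). The function names and the two short HRIRs are made up for illustration; real HRIRs come from a measured set and are hundreds of samples long.

```python
def convolve(signal, impulse_response):
    """Direct-form time-domain convolution of two lists of samples."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def spatialize(mono, hrir_left, hrir_right):
    """Filter one mono signal with a left/right HRIR pair, one output per ear."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


# Hypothetical impulse responses for a source on the listener's right:
# the right ear hears the sound first and louder, the left ear later and quieter.
HRIR_RIGHT = [0.9, 0.1, 0.0, 0.0]
HRIR_LEFT = [0.0, 0.0, 0.5, 0.1]

click = [1.0, 0.0, 0.0]
left, right = spatialize(click, HRIR_LEFT, HRIR_RIGHT)
```

The nested loop costs one multiply per signal sample per filter tap, which is why long filters are typically applied in the frequency domain instead.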

In addition, headphones are required, because reproducing the effect over a speaker array is considerably more complicated.

Head tracking

People use head movements to identify and locate sounds in space. Without them, our ability to localize sound in three dimensions is greatly reduced. When a listener turns their head, the audio must reflect that movement. Otherwise the sound feels artificial and immersion suffers.

VR headsets can already track head orientation and, in some cases, head position. If the developer feeds that orientation into the audio pipeline, sounds can be projected convincingly into 3D space.
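One way to picture this step: before an HRTF is chosen, the source direction has to be expressed relative to where the head is currently facing. The sketch below handles yaw only and assumes angles in degrees with positive values to the listener's right. Both the function name and the convention are illustrative assumptions, not taken from any particular SDK.

```python
def relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Source direction relative to the facing direction, wrapped to [-180, 180)."""
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


# A source 30 degrees to the right of "world forward"; the listener has
# turned 90 degrees to the right, so the source is now 60 degrees to the left.
angle = relative_azimuth(30.0, 90.0)
```

The resulting angle is what would be used to look up the HRTF, so the sound stays fixed in the world while the head turns.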

Distance modeling

An HRTF conveys the direction of a sound, but not its distance. People rely on several cues to judge or estimate how far away a sound source is, and each of them can be simulated in software:

Loudness

This is probably the simplest and most reliable cue. Developers can attenuate a sound according to the distance between the listener and the source.

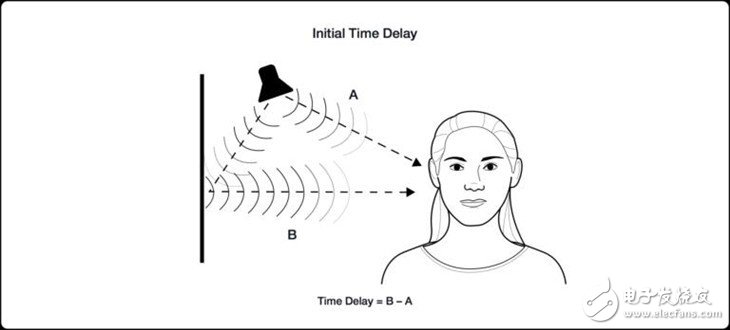
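A common way to express the loudness cue is an inverse-distance gain, since sound pressure in a free field falls off in proportion to 1/distance. The sketch below is one possible curve, not a prescribed one. The names and the 1 m reference distance are assumptions chosen for illustration.

```python
import math


def distance_gain(distance_m, reference_m=1.0):
    """Linear gain: 1.0 at or inside the reference distance, halved per doubling."""
    return reference_m / max(distance_m, reference_m)


def gain_to_db(gain):
    """Convert a linear amplitude gain to decibels."""
    return 20.0 * math.log10(gain)


near = distance_gain(1.0)
far = distance_gain(2.0)
```

Clamping at the reference distance keeps the gain from growing without bound as the source approaches the listener.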

Initial time delay

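The initial time delay is the gap between the arrival of the direct sound and the arrival of its first reflection. It is difficult to replicate, because the early reflections have to be computed from the surrounding geometry and its material characteristics. This is computationally expensive and complicated to implement.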
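To make the cue concrete, here is a deliberately tiny case: a single reflecting floor, handled by mirroring the source below it (the image-source idea). This is only a sketch of the quantity involved, with a hypothetical function name and an approximate speed of sound. Real scenes have many surfaces, which is where the cost comes from.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at about 20 C


def initial_time_delay(horizontal_distance_m, source_height_m, listener_height_m):
    """Seconds between the direct sound and a single floor reflection."""
    direct = math.hypot(horizontal_distance_m, source_height_m - listener_height_m)
    # Mirror the source below the floor: the reflected path has the same
    # length as a straight line from the mirrored source to the listener.
    reflected = math.hypot(horizontal_distance_m, source_height_m + listener_height_m)
    return (reflected - direct) / SPEED_OF_SOUND_M_S


close_gap = initial_time_delay(1.0, 1.5, 1.5)
far_gap = initial_time_delay(10.0, 1.5, 1.5)
```

In this setup the gap shrinks as the source moves away, which is what makes it usable as a distance cue.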

Direct/reverberant

The balance between direct and reverberant sound is a natural by-product of any system that accurately models reflections and late reverberation. Such systems are usually computationally very expensive.
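For intuition only, the sketch below uses a much cruder model than the accurate simulation described above. It assumes the direct sound falls off as 1/distance while the reverberant level stays constant throughout the room. Under that assumption the two are equal at a so-called critical distance, which is passed in here as a hypothetical parameter.

```python
import math


def direct_to_reverberant_db(distance_m, critical_distance_m):
    """Direct-to-reverberant ratio in dB under a constant-reverb-level assumption."""
    return 20.0 * math.log10(critical_distance_m / distance_m)


at_critical = direct_to_reverberant_db(2.0, 2.0)
twice_as_far = direct_to_reverberant_db(4.0, 2.0)
```

Positive values mean the direct sound dominates and the source reads as close. Negative values mean the reverberation dominates and the source reads as distant.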

High frequency attenuation

High-frequency attenuation caused by air absorption is a subtle effect, but it is easy to model with a simple low-pass filter by adjusting its slope and cutoff frequency. It is less important than the other distance cues, but it should not be ignored.
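A minimal sketch of that idea follows: a one-pole low-pass filter whose cutoff drops as the source moves away. The distance-to-cutoff mapping and all of its default values are invented for illustration and are not derived from measured air absorption. A one-pole filter also has a fixed 6 dB per octave slope, so changing the slope would need a higher-order filter.

```python
import math


def cutoff_for_distance(distance_m, near_cutoff_hz=20000.0,
                        far_cutoff_hz=4000.0, max_distance_m=100.0):
    """Hypothetical mapping: cutoff falls linearly from near to far, then holds."""
    t = min(max(distance_m / max_distance_m, 0.0), 1.0)
    return near_cutoff_hz + t * (far_cutoff_hz - near_cutoff_hz)


def one_pole_lowpass(samples, cutoff_hz, sample_rate_hz=48000.0):
    """Apply y[n] = y[n-1] + a * (x[n] - y[n-1]) with a = 1 - exp(-2*pi*fc/fs)."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    out = []
    y = 0.0
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


# A signal alternating at the highest representable frequency.
harsh = [1.0, -1.0] * 100
near_out = one_pole_lowpass(harsh, cutoff_for_distance(0.0))
far_out = one_pole_lowpass(harsh, cutoff_for_distance(100.0))
```

The distant version of the signal comes out with noticeably less high-frequency energy, while slowly varying content passes almost unchanged.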
