Speech recognition is "invading" our lives. It's built into our phones, our game consoles, and our smart watches. It's even automating our homes. For $50, you can buy an Amazon Echo Dot, a magic box that lets you order takeout, get weather reports, and even buy trash bags. All you have to say is:

Alexa, give me a pizza!

The Echo Dot was a hit over the 2016 holiday season and sold out on Amazon.

But speech recognition has been around for many years, so why is it only now going mainstream? Because deep learning has finally made speech recognition accurate enough to be practical outside of carefully controlled environments.

Professor Andrew Ng once predicted that as speech recognition goes from 95% accurate to 99% accurate, it will become the primary way we interact with computers.

Let's learn how to do speech recognition with deep learning!

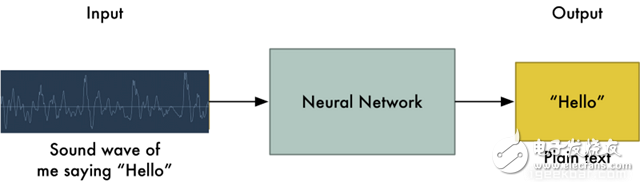

Machine learning isn't always a black box

If you know how neural machine translation works, you might guess that we could simply feed sound recordings into a neural network and train it to produce text:

That's the holy grail of speech recognition with deep learning, but unfortunately we aren't quite there yet (at least not at the time of writing; I bet we will be in a few years).

One big problem is that speech varies in speed. One person might say "Hello!" very quickly and another might say "heeeellllllllllllloooooo!!" very slowly, producing a much longer sound file with much more data. Both files should be recognized as exactly the same text: "Hello!" As it turns out, automatically aligning audio files of various lengths to a fixed-length piece of text is very difficult.

To work around this, we have to use some special tricks and extra processing on top of a deep neural network. Let's see how it works!

Turning sounds into bits

The first step in speech recognition is obvious: we need to feed sound waves into a computer.

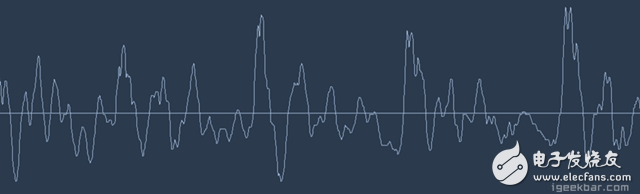

How do we turn sound waves into numbers? Let's use this sound clip of me saying "Hello" as an example:

Sound waves are one-dimensional: at every moment in time, they have a single value based on the height of the wave. Let's zoom in on one tiny part of the sound wave:

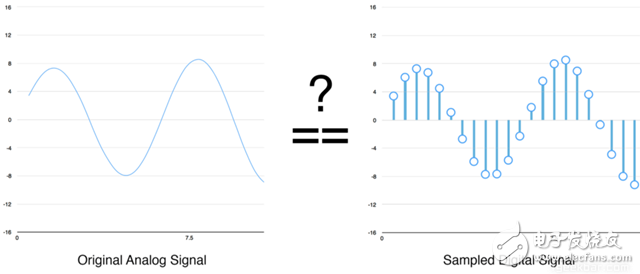

To turn this sound wave into numbers, we just record the height of the wave at equally spaced points:

This is called sampling. We take a reading thousands of times per second and record a number representing the height of the sound wave at that point in time. That's basically all an uncompressed .wav audio file is.

"CD quality" audio is sampled at 44.1 kHz (44,100 readings per second). But for speech recognition, a sampling rate of 16 kHz (16,000 samples per second) is enough to cover the frequency range of human speech.
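To make the idea of sampling concrete, here is a minimal sketch that takes equally spaced readings of a pure tone. The `sample_wave` helper is hypothetical, and a 440 Hz sine wave stands in for real speech; the point is only that one second of audio becomes 16,000 numbers at the speech rate and 44,100 at the CD rate.

```python
import numpy as np

def sample_wave(frequency_hz, sample_rate_hz, duration_s):
    """Record the height of a pure sine wave at equally spaced points in time."""
    n_samples = int(round(sample_rate_hz * duration_s))
    # Time (in seconds) of each reading: 0, 1/rate, 2/rate, ...
    t = np.arange(n_samples) / sample_rate_hz
    return np.sin(2 * np.pi * frequency_hz * t)

# One second of a 440 Hz tone at the speech-recognition rate and the CD rate.
speech = sample_wave(440, 16_000, 1.0)
cd = sample_wave(440, 44_100, 1.0)
print(len(speech), len(cd))  # 16000 44100
```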

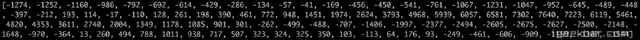

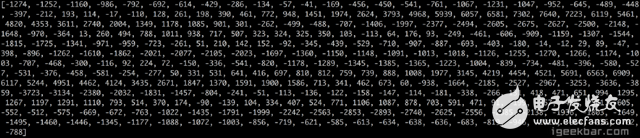

Let us sample the "Hello" sound wave 16,000 times per second. Here are the first 100 samples:

Each number represents the amplitude of the sound wave at intervals of 1/16,000th of a second.
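Because an uncompressed .wav file is essentially this list of sample values plus a small header, Python's standard-library `wave` module can write and read it back directly. This sketch assumes mono, 16-bit samples, and uses 100 readings of a 440 Hz tone as a stand-in for the "Hello" recording.

```python
import array
import io
import math
import wave

SAMPLE_RATE = 16_000

# 100 readings of a 440 Hz tone, scaled to 16-bit signed integers.
samples = [int(0.5 * 32767 * math.sin(2 * math.pi * 440 * n / SAMPLE_RATE))
           for n in range(100)]

# Write them into an in-memory mono, 16-bit PCM .wav file.
buffer = io.BytesIO()
with wave.open(buffer, "wb") as wav_out:
    wav_out.setnchannels(1)
    wav_out.setsampwidth(2)              # 2 bytes = 16 bits per sample
    wav_out.setframerate(SAMPLE_RATE)
    # `wave` takes raw bytes in the machine's native byte order.
    wav_out.writeframes(array.array("h", samples).tobytes())

# Read the file back: after the header, the payload is the same list of numbers.
buffer.seek(0)
with wave.open(buffer, "rb") as wav_in:
    rate = wav_in.getframerate()
    raw = wav_in.readframes(wav_in.getnframes())
decoded = array.array("h")
decoded.frombytes(raw)
print(rate, decoded.tolist() == samples)  # 16000 True
```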

A quick sidebar on digital sampling

You might be thinking that sampling only creates a rough approximation of the original sound wave, because it only takes occasional readings. There are gaps between our readings, so we must be losing data, right?

However, thanks to the sampling theorem (the Nyquist theorem), we know that we can use math to perfectly reconstruct the original sound wave from the spaced-out samples, as long as we sample at more than twice the highest frequency we want to capture.

I mention this because nearly everyone gets this wrong and assumes that a higher sampling rate always leads to better audio quality. It doesn't.
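A small sketch of the flip side of the sampling theorem: at 16 kHz the limit is 8 kHz, and a tone above that limit produces exactly the same readings as a lower tone (this is called aliasing). The `sample_cosine` helper and the 10 kHz / 6 kHz pair are illustrative choices, not taken from the article.

```python
import numpy as np

def sample_cosine(frequency_hz, sample_rate_hz, n_samples):
    """Take n_samples equally spaced readings of a cosine wave."""
    n = np.arange(n_samples)
    return np.cos(2 * np.pi * frequency_hz * n / sample_rate_hz)

# At 16 kHz the limit is 8 kHz. A 10 kHz tone is above it...
above_limit = sample_cosine(10_000, 16_000, 64)
# ...and its readings are identical to those of a 6 kHz tone (16 - 10 = 6).
alias = sample_cosine(6_000, 16_000, 64)
print(np.allclose(above_limit, alias))  # True: the samples can't tell them apart

# Sampling fast enough (44.1 kHz > 2 x 10 kHz) keeps the two tones distinct.
print(np.allclose(sample_cosine(10_000, 44_100, 64),
                  sample_cosine(6_000, 44_100, 64)))  # False
```

Once the rate is above twice the highest frequency present, extra samples describe the same band-limited wave again rather than adding new detail, which is why a higher rate is not automatically better.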

Preprocessing our sampled sound data

We now have an array of numbers, with each number representing the sound wave's amplitude at 1/16,000th of a second intervals.

We could feed these numbers right into a neural network, but trying to recognize speech patterns by processing these samples directly is difficult. Instead, we can make the problem easier by doing some preprocessing on the audio data first.

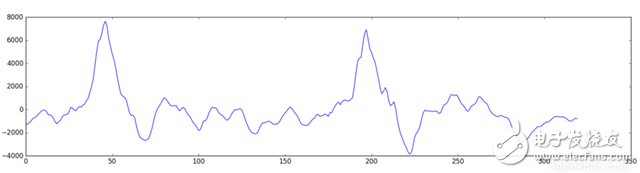

Let's start by dividing our sampled audio into 20-millisecond blocks. Here are our first 20 milliseconds of audio (i.e., our first 320 samples):

Drawing these numbers as a simple line chart, we get the approximate shape of the original sound wave within 20 milliseconds:

This recording is only 1/50th of a second long, but even such a short recording is a complex mix of different frequencies of sound. There's some low-pitched sound, some mid-range sound, and even some high-pitched sound sprinkled in. Taken together, these different frequencies mix to make up the complex sound of human speech.
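Splitting the samples into the 20-millisecond blocks described above can be sketched like this. The `split_into_blocks` helper is hypothetical and random noise stands in for the "Hello" recording; at 16 kHz, each block is 16,000 × 0.02 = 320 samples, so one second gives 50 blocks.

```python
import numpy as np

SAMPLE_RATE = 16_000
BLOCK_MS = 20
BLOCK_SIZE = SAMPLE_RATE * BLOCK_MS // 1000  # 320 samples per 20 ms block

def split_into_blocks(samples, block_size=BLOCK_SIZE):
    """Cut a 1-D array of samples into consecutive, non-overlapping blocks.

    Leftover samples at the end that don't fill a whole block are dropped.
    """
    n_blocks = len(samples) // block_size
    return np.reshape(samples[: n_blocks * block_size], (n_blocks, block_size))

one_second = np.random.default_rng(0).standard_normal(SAMPLE_RATE)  # stand-in audio
blocks = split_into_blocks(one_second)
print(BLOCK_SIZE, blocks.shape)  # 320 (50, 320)
```

This keeps the article's simple back-to-back blocks; many practical systems instead use overlapping frames with a window function applied to each.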

To make this data easier for a neural network to process, we are going to break this complex sound wave apart into its component parts. We'll separate out the lowest-pitched part, then the next-lowest-pitched part, and so on. Then, by adding up how much energy is in each of those frequency bands (from low to high), we create a fingerprint of sorts for this audio snippet.

Imagine you had a recording of someone playing a C major chord on a piano. That sound is the combination of three notes: C, E, and G, all mixed together into one complex sound. We want to break that complex sound apart into the individual notes to discover that they were C, E, and G. This is exactly the same idea we need for speech recognition.
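Here is a minimal sketch of the piano example using NumPy's real-valued FFT (`np.fft.rfft`). It assumes equal-temperament pitches for C4, E4, and G4 and uses pure sine waves rather than a real piano recording; with one second of audio, the FFT bins are 1 Hz apart, so the three strongest bins land on the three notes.

```python
import numpy as np

SAMPLE_RATE = 16_000
# Approximate pitches (Hz) of the notes in a C major chord: C4, E4 and G4.
NOTES = {"C": 261.63, "E": 329.63, "G": 392.00}

# One second of all three notes sounding together, mixed into a single wave.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
chord = sum(np.sin(2 * np.pi * f * t) for f in NOTES.values())

# The Fourier transform pulls the mixture apart into its component frequencies.
spectrum = np.abs(np.fft.rfft(chord))
freqs = np.fft.rfftfreq(len(chord), d=1 / SAMPLE_RATE)  # 1 Hz between bins here

# The three strongest frequencies land on the three notes (to the nearest Hz).
loudest = sorted(round(float(f)) for f in freqs[np.argsort(spectrum)[-3:]])
print(loudest)  # [262, 330, 392]
```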

We do this using a mathematical operation called a Fourier transform. It breaks the complex sound wave apart into the simple sound waves that make it up. Once we have those individual sound waves, we add up how much energy is contained in each frequency band.

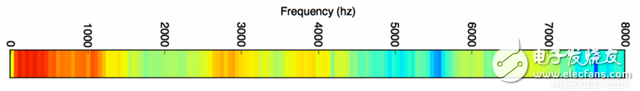

The end result is a score of how important each frequency range is, from low pitch (i.e., bass notes) to high pitch. Using 50 Hz bands, the energy in our 20 ms of audio can be expressed as the following list, from low frequencies to high frequencies:

But this is a lot easier to understand when you draw it as a chart:

As you can see, there is a lot of low-frequency energy in our 20 millisecond sound segment, but there is not much energy at the higher frequencies. This is a typical "male" voice.
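The per-band energy calculation can be sketched for a single 20 ms block. One convenient fact makes the 50 Hz bands fall out naturally: with 320 samples at 16 kHz, adjacent FFT bins are 16,000 / 320 = 50 Hz apart, so each bin already covers one band. The `band_energies` helper is hypothetical, and the test block (a strong 150 Hz tone plus a faint 3 kHz tone) is a made-up stand-in for a low-pitched voice.

```python
import numpy as np

SAMPLE_RATE = 16_000
BLOCK_SIZE = 320  # 20 ms at 16 kHz

def band_energies(block, sample_rate=SAMPLE_RATE):
    """Energy in each frequency band of one audio block, from low to high.

    With 320 samples at 16 kHz, the FFT bins are 50 Hz apart,
    so each bin acts as one 50 Hz band.
    """
    energies = np.abs(np.fft.rfft(block)) ** 2
    freqs = np.fft.rfftfreq(len(block), d=1 / sample_rate)
    return freqs, energies

# Stand-in block: a strong 150 Hz tone plus a faint 3 kHz tone.
t = np.arange(BLOCK_SIZE) / SAMPLE_RATE
block = 1.0 * np.sin(2 * np.pi * 150 * t) + 0.1 * np.sin(2 * np.pi * 3000 * t)

freqs, energies = band_energies(block)
peak_hz = int(round(freqs[np.argmax(energies)]))
low_share = energies[freqs < 1000].sum() / energies.sum()
print(len(energies), peak_hz, round(float(low_share), 2))  # 161 150 0.99
```

The output reads the same way as the chart: 161 bands from 0 Hz up to 8,000 Hz, with almost all of the energy sitting in the low bands.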

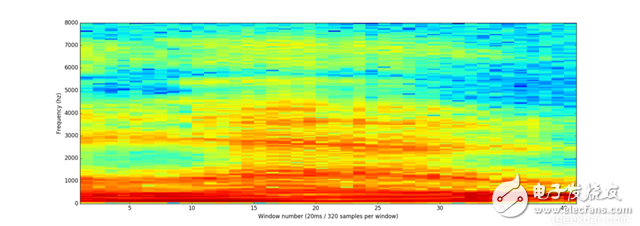

If we repeat this process for every 20-millisecond block of audio, we end up with a spectrogram (each column, from left to right, is one 20-millisecond block of audio):

Spectrograms are cool because you can actually see notes and other pitch patterns in the audio data. A neural network can find patterns in this kind of data much more easily than in raw sound waves, so this is the data representation we'll actually feed into our neural network.
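Stacking the per-block band energies side by side gives the spectrogram. This is a minimal sketch with a hypothetical `spectrogram` helper and a made-up test signal (a tone that jumps from 200 Hz to 1,000 Hz halfway through), so the pitch change should show up as the strongest row moving from band 4 (200 / 50) to band 20 (1,000 / 50).

```python
import numpy as np

SAMPLE_RATE = 16_000
BLOCK_SIZE = 320  # 20 ms at 16 kHz

def spectrogram(samples, block_size=BLOCK_SIZE):
    """Stack per-block band energies into a (bands, blocks) array.

    Column j holds the energies of the j-th 20 ms block, low bands at row 0.
    """
    n_blocks = len(samples) // block_size
    blocks = samples[: n_blocks * block_size].reshape(n_blocks, block_size)
    energies = np.abs(np.fft.rfft(blocks, axis=1)) ** 2   # one row per block
    return energies.T                                     # one column per block

# Stand-in audio: a tone that jumps from 200 Hz to 1000 Hz halfway through.
t = np.arange(SAMPLE_RATE) / SAMPLE_RATE
audio = np.where(t < 0.5, np.sin(2 * np.pi * 200 * t), np.sin(2 * np.pi * 1000 * t))

spec = spectrogram(audio)
print(spec.shape)  # (161, 50): 161 bands of 50 Hz, 50 blocks of 20 ms
```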

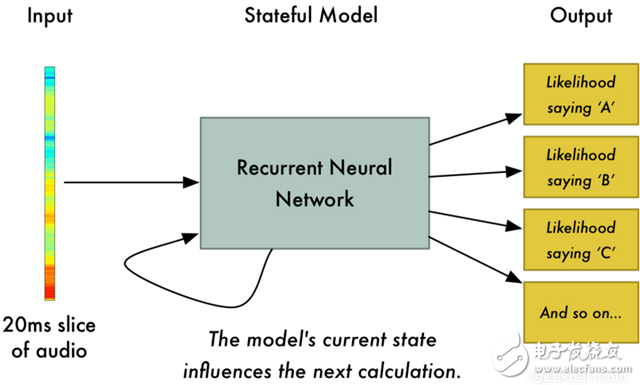

Recognizing characters from short sounds

Now that we have our audio in a format that's easy to process, we will feed it into a deep neural network. The input to the neural network will be 20-millisecond audio blocks. For each little audio slice, the network will try to figure out which letter corresponds to the sound currently being spoken.

We'll use a recurrent neural network, that is, a neural network with a memory that influences its future predictions. That's because each letter it predicts should affect the likelihood of the next letter it predicts. For example, if we have said "HEL" so far, it's very likely we will say "LO" next to finish the word "Hello". It's much less likely that we will say something unpronounceable next, like "XYZ". So having a memory of previous predictions helps the neural network make more accurate predictions going forward.
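The memory idea can be sketched as the forward pass of the simplest kind of recurrent layer (an Elman-style RNN): a hidden state `h` is updated from each new block and from its own previous value, and every step outputs a probability for each character. Everything here is an assumption for illustration: the `ALPHABET` label set, the layer sizes, and the weights, which are random and untrained (a real system learns them from data, and production models typically use more elaborate recurrent cells such as LSTMs).

```python
import numpy as np

ALPHABET = list("_ABCDEFGHIJKLMNOPQRSTUVWXYZ ")  # hypothetical labels; "_" = blank
N_BANDS = 161   # one input value per 50 Hz band of a 20 ms block
HIDDEN = 64     # size of the network's memory (arbitrary choice)

rng = np.random.default_rng(0)
# Untrained, randomly initialised weights, for illustration only.
W_in = rng.normal(0, 0.01, (HIDDEN, N_BANDS))
W_rec = rng.normal(0, 0.1, (HIDDEN, HIDDEN))
W_out = rng.normal(0, 0.1, (len(ALPHABET), HIDDEN))

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def run_rnn(blocks):
    """Return one probability distribution over ALPHABET per audio block.

    `blocks` has one row per 20 ms block and one column per frequency band.
    """
    h = np.zeros(HIDDEN)                    # memory starts empty
    outputs = []
    for x in blocks:                        # one 20 ms block at a time
        h = np.tanh(W_in @ x + W_rec @ h)   # new memory mixes input and old memory
        outputs.append(softmax(W_out @ h))
    return np.array(outputs)

fake_blocks = rng.random((50, N_BANDS))     # 50 blocks of stand-in features
probs = run_rnn(fake_blocks)
print(probs.shape)  # (50, 28)
```

Because `h` carries over between steps, the same block can produce a different prediction depending on what came before it, which is the property the paragraph above relies on.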

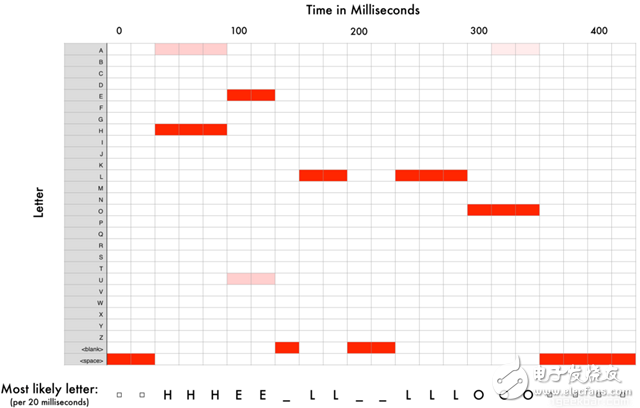

After we run our entire audio clip through the neural network (one block at a time), we'll end up with a mapping of each audio block to the letter most likely spoken during that block. Here's roughly what that mapping looks like for me saying "Hello":

Our neural network is predicting that one likely thing I said was "HHHEE_LL_LLLOOO" (where "_" marks a blank). But it also thinks it's possible that I said "HHHUU_LL_LLLOOO" or even "AAAUU_LL_LLLOOO".

We follow a few steps to clean up this output. First, we replace any run of repeated characters with a single character:

HHHEE_LL_LLLOOO becomes HE_L_LO

HHHUU_LL_LLLOOO becomes HU_L_LO

AAAUU_LL_LLLOOO becomes AU_L_LO

Then we will remove all blanks:

HE_L_LO becomes HELLO

HU_L_LO becomes HULLO

AU_L_LO becomes AULLO
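The two clean-up steps above can be sketched in a few lines with `itertools.groupby`. The `collapse_output` name is hypothetical; the collapse-then-drop-blanks rule itself is the same one used when decoding CTC (Connectionist Temporal Classification) output.

```python
from itertools import groupby

BLANK = "_"

def collapse_output(per_block_letters, blank=BLANK):
    """Turn per-block predictions like 'HHHEE_LL_LLLOOO' into text.

    Step 1: merge each run of the same character into one character.
    Step 2: drop the blank markers.
    """
    merged = "".join(char for char, _run in groupby(per_block_letters))
    return merged.replace(blank, "")

for raw in ["HHHEE_LL_LLLOOO", "HHHUU_LL_LLLOOO", "AAAUU_LL_LLLOOO"]:
    print(raw, "->", collapse_output(raw))
# HHHEE_LL_LLLOOO -> HELLO
# HHHUU_LL_LLLOOO -> HULLO
# AAAUU_LL_LLLOOO -> AULLO
```

The order of the steps matters: the blank between the two runs of "L" is what keeps the double "L" in "HELLO". If you removed the blanks first, "LL_LLL" would collapse into a single "L".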

That leaves us with three possible transcriptions: "Hello", "Hullo", and "Aullo". If you say them out loud, all of them sound similar to "Hello". Because the neural network predicts only one character at a time, it will come up with some transcriptions that are purely sounded out. For example, if you say "He would not go", it might give "He wud net go" as one possible transcription.

The trick is to combine these pronunciation-based predictions with likelihood scores based on a large database of written text (books, news articles, etc.). You throw out the transcriptions that seem least likely to be real and keep the one that seems most realistic.

Of our possible transcriptions "Hello", "Hullo", and "Aullo", "Hello" will obviously appear far more often in a database of text (not to mention in our original audio-based training data), so it's probably the correct one. We'll pick "Hello" as our final transcription instead of the others. Done!
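Here is a toy sketch of that rescoring step: each candidate's acoustic score from the network is combined with a score for how common the word is in written text, and the best total wins. All of the numbers are made up for illustration (here the sound alone slightly prefers "HULLO"), the six-word "corpus" stands in for a large text database, and the smoothed single-word-frequency model and the `lm_weight` knob are assumptions; real systems use much larger language models.

```python
import math
from collections import Counter

# Made-up acoustic scores for illustration only: here the network itself
# slightly prefers "HULLO".
acoustic_prob = {"HELLO": 0.35, "HULLO": 0.40, "AULLO": 0.25}

# A tiny stand-in for a large database of written text.
corpus = "hello there hello world say hello hullo old chap".upper().split()
word_counts = Counter(corpus)

def text_log_prob(word, counts=word_counts):
    """Add-one smoothed single-word log-probability (unseen words aren't impossible)."""
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 leaves room for unseen words
    return math.log((counts[word] + 1) / (total + vocab_size))

def best_transcription(candidates, lm_weight=1.0):
    """Pick the candidate with the best combined acoustic + text score."""
    return max(candidates,
               key=lambda w: math.log(candidates[w]) + lm_weight * text_log_prob(w))

print(best_transcription(acoustic_prob, lm_weight=0.0))  # HULLO (sound alone)
print(best_transcription(acoustic_prob))                 # HELLO (sound + text)
```

Working in log-probabilities turns the product of the two scores into a sum; `lm_weight` controls how much the text statistics can override what the network heard.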

Wait a minute!

You might be thinking, "But what if someone really says 'Hullo'? It's a real word. Maybe 'Hello' is the wrong transcription!"

Of course, someone may actually say "Hullo" instead of "Hello". But a speech recognition system like this (trained on American English) will basically never produce "Hullo" as a transcription. "Hullo" is so much less likely than "Hello" that the system will always think you are saying "Hello", no matter how much you emphasize the "U" sound.

Try it out! If your phone is set to American English, try to get your phone's assistant to recognize the word "Hullo". You can't! It refuses, and it will always understand it as "Hello".

Not recognizing "Hullo" is reasonable behavior, but sometimes you'll run into annoying cases where your phone just refuses to understand something valid you are saying. That's why these speech recognition models are constantly being retrained with more data to fix these edge cases.

Can I build my own speech recognition system?

One of the coolest things about machine learning is how simple it sometimes seems. You get a bunch of data, feed it into a machine learning algorithm, and then magically you have a world-class AI system running on your graphics card... right?

That's true in some cases, but not for speech. Speech recognition is a hard problem. You have to overcome almost endless challenges: bad-quality microphones, background noise, reverb and echo, accent variations, and more. All of these need to be present in your training data to make sure the neural network can deal with them.
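One common way to get background noise into training data (a general data-augmentation technique, not something the article prescribes) is to mix noise recordings into clean recordings at a chosen signal-to-noise ratio (SNR). In this sketch the `mix_at_snr` helper is hypothetical, a 220 Hz tone stands in for a clean speech clip, and random noise stands in for a recorded noisy room. Mixing like this does not capture everything, such as how people change their voices in noise, so real recordings still matter.

```python
import numpy as np

def mix_at_snr(clean, noise, snr_db):
    """Add background noise to a clean recording at a chosen signal-to-noise ratio.

    SNR in decibels is 10 * log10(signal_power / noise_power).
    Returns the noisy mix and the scaled noise that was added.
    """
    noise = noise[: len(clean)]
    clean_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)
    # Scale the noise so that clean_power / scaled_noise_power hits the target.
    target_noise_power = clean_power / (10 ** (snr_db / 10))
    scaled_noise = noise * np.sqrt(target_noise_power / noise_power)
    return clean + scaled_noise, scaled_noise

rng = np.random.default_rng(0)
t = np.arange(16_000) / 16_000
clean = np.sin(2 * np.pi * 220 * t)   # stand-in for a clean speech clip
noise = rng.standard_normal(16_000)   # stand-in for recorded room noise

noisy, added = mix_at_snr(clean, noise, snr_db=5)
measured = 10 * np.log10(np.mean(clean ** 2) / np.mean(added ** 2))
print(round(measured, 2))  # 5.0
```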

Here's another example: did you know that when you speak in a loud room, you unconsciously raise the pitch of your voice to talk over the noise? Humans have no trouble understanding you either way, but neural networks need to be trained to handle this special case. So you need training data of people yelling over noise!

To build a voice recognition system that performs on the level of Siri, Google Now, or Alexa, you will need a lot of training data, far more than you can likely get without hiring hundreds of people to record it for you. And since users have little tolerance for low-quality voice recognition, you can't skimp on this. No one wants a voice recognition system that works only 80% of the time.

For companies like Google or Amazon, tens of thousands of hours of spoken audio recorded in real-life situations is gold. That data is what separates their world-class speech recognition systems from your own. The whole point of letting you use Google Now or Siri for free, or selling Alexa for just $50 with no subscription fee, is to get you to use them as much as possible. Everything you say into one of these systems is recorded forever and used as training data for future versions of their speech recognition algorithms. That's the real purpose!

Don't believe me? If you have an Android phone with Google Now installed, Google lets you listen to a recording of every word you've ever said to it.

You can do the same thing for Amazon through Alexa. Unfortunately, Apple doesn't let you access your Siri voice data.

So if you are looking for a startup idea, I wouldn't recommend trying to build your own speech recognition system to compete with Google. Instead, figure out a way to get people to give you hours of recordings of themselves talking. That data can be your product.
